Technical Overview of Dome Projection

The following is a technical presentation of what is involved in dome projection for venues such as planetariums and geodesic domes. Digital and video projection for domes is known as fulldome projection, dating back to the early eighties.

This is an excerpt from a seminar presentation made by Paul Bourke of Swinburne University, Melbourne, Australia. Mr. Bourke is an expert in the field.

Why a hemispherical screen?

Historically, it is the natural way to represent the night sky, which spans 180 degrees x 180 degrees.

Peripheral vision is one of the capabilities of our visual system that is not engaged when looking at standard flat or small displays.

For all practical purposes our horizontal field of view is 180 degrees and our vertical field of view is approximately 120 degrees.

Note that we don’t necessarily see colour or high definition in our extreme horizontal field; it has evolved to be a strong motion detection mechanism. Our visual system does “fill in” the colour information for us.

A hemispherical dome allows our entire visual field (vertically and horizontally) to be filled with digital content.

We are used to seeing the frame of the image, which anchors the virtual world within our real world. In a dome one often doesn’t see that reference frame.

The “magical” thing happens when one doesn’t see the dome surface, which is more common in high-quality domes with good colour reproduction. Our visual system, without any physical-world frame of reference, is very willing to interpret representations of 3D worlds as having depth.

Dome projection

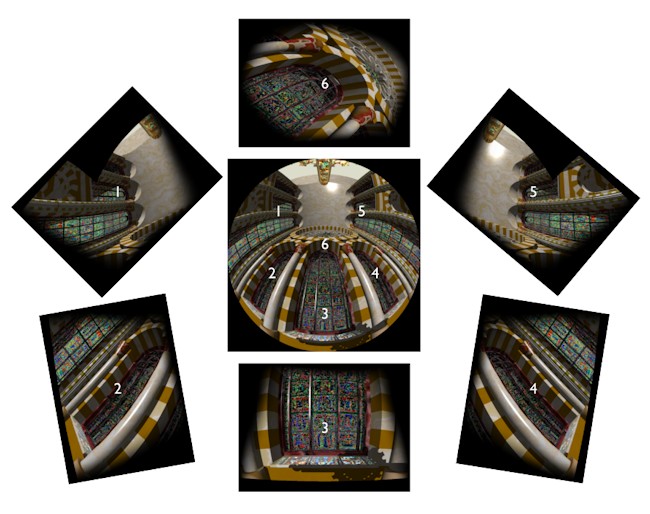

- Projection options listed roughly in order of resolution.

- Single projector

- Fisheye lens

- Truncated fisheye lens (see later)

- Spherical mirror

- Twin projectors with partial fisheye lenses.

- Note that fisheye systems typically need to occupy the center of the dome.

- Multiple (typically 5-7) projectors located around the rim of the dome. The images are blended together to form a continuous image.

- As a content developer, one need not be (too) concerned with the projection technology.

Introduction to perspective theory

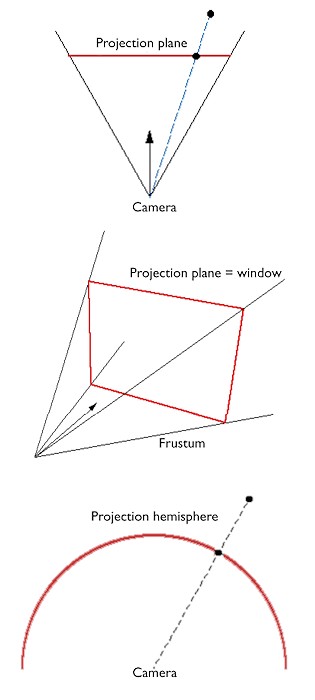

- Fundamentals of a perspective projection: the point on the projection plane is the intersection of the plane with a line from the 3D object to the camera.

- Model of looking through a window.

- A perspective projection is the simplest projection that captures the required field of view for presentation onto a rectangular region of a plane.

- Why isn’t a perspective projection sufficient for a dome?

- Doesn’t capture the field of view required.

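- Not efficient to create a perspective projection wider than 120 degrees: the image plane grows as tan(FOV/2), so pixels towards the edge cover ever smaller angles, and a 180 degree view would need an infinite plane.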

- The intersection of world objects with a sphere (the projection hemisphere) defines a fisheye or spherical projection.

- A fisheye projection is the simplest projection that captures the required field of view for subsequent presentation onto a hemispherical surface.
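The inefficiency of wide perspective projections can be checked numerically. The sketch below assumes a camera at unit distance from a flat image plane (the function names are illustrative, not from any package): the half-width of the plane is tan(FOV/2), and because the viewing angle at plane offset x is atan(x), a pixel at the edge subtends cos²(FOV/2) times the angle of a pixel at the centre.

```python
import math


def plane_half_width(fov_deg: float) -> float:
    """Half-width of a perspective image plane at unit distance from the camera."""
    return math.tan(math.radians(fov_deg) / 2.0)


def edge_pixel_angle_ratio(fov_deg: float) -> float:
    """Angle subtended by an edge pixel relative to a centre pixel.

    The viewing angle at plane offset x is atan(x), whose derivative is
    1 / (1 + x**2); at the edge x = tan(fov/2) this equals cos(fov/2)**2.
    """
    return math.cos(math.radians(fov_deg) / 2.0) ** 2


if __name__ == "__main__":
    for fov in (90, 120, 150, 170):
        print(f"{fov:3d} deg: half-width {plane_half_width(fov):6.2f}, "
              f"edge/centre pixel angle {edge_pixel_angle_ratio(fov):.4f}")
```

At 120 degrees the plane is already about 3.5 units wide and an edge pixel covers only a quarter of the angle of a centre pixel; as the field of view approaches 180 degrees the plane width grows without bound.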

Mathematics of a fisheye image

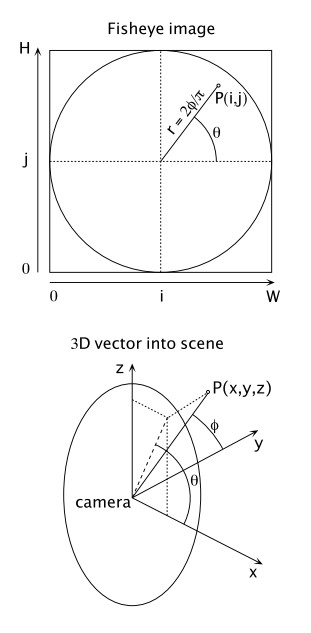

- 1. Given a point P(i,j) on the fisheye image (in normalised image coordinates), what is the vector P(x,y,z) into the scene?

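$$
\begin{aligned}
r &= \sqrt{P_i^2 + P_j^2} \\
\theta &= \operatorname{atan2}(P_j, P_i) \\
\phi &= r\,\pi / 2 \\
P_x &= \sin\phi \cos\theta \\
P_y &= \cos\phi \\
P_z &= \sin\phi \sin\theta
\end{aligned}
$$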

- 2. Given a point P(x,y,z) in world coordinates what is the position P(i,j) on the fisheye image?

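$$
\begin{aligned}
\phi &= \operatorname{atan2}\left(\sqrt{P_x^2 + P_z^2},\ P_y\right) \\
\theta &= \operatorname{atan2}(P_z, P_x) \\
r &= \phi / (\pi / 2) \\
P_i &= r \cos\theta \\
P_j &= r \sin\theta
\end{aligned}
$$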

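- It is traditional to limit the fisheye image to a circle, but the mapping is also defined outside the circle (r > 1 corresponds to directions more than 90 degrees from the view direction).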
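The two mappings translate directly into code. This is a minimal sketch assuming, as in the equations, a 180 degree fisheye looking along +y with image coordinates normalised to [-1, 1]. All function names are illustrative, and the pixel-to-normalised helper (including whether j grows with the row index) is a hypothetical convention, since frame orientation varies between installations.

```python
import math


def pixel_to_normalised(col: int, row: int, size: int) -> tuple[float, float]:
    """Hypothetical helper: pixel centre in a size x size frame -> (i, j) in [-1, 1]."""
    return (2.0 * (col + 0.5) / size - 1.0, 2.0 * (row + 0.5) / size - 1.0)


def fisheye_to_vector(i: float, j: float) -> tuple[float, float, float]:
    """Normalised fisheye position (i, j) -> unit direction (x, y, z) into the scene."""
    r = math.hypot(i, j)
    theta = math.atan2(j, i)
    phi = r * math.pi / 2.0  # angle away from the view direction (+y)
    return (math.sin(phi) * math.cos(theta),
            math.cos(phi),
            math.sin(phi) * math.sin(theta))


def vector_to_fisheye(x: float, y: float, z: float) -> tuple[float, float]:
    """World direction (x, y, z) of any length -> normalised fisheye position (i, j)."""
    phi = math.atan2(math.hypot(x, z), y)
    theta = math.atan2(z, x)
    r = phi / (math.pi / 2.0)
    return (r * math.cos(theta), r * math.sin(theta))


if __name__ == "__main__":
    i, j = pixel_to_normalised(100, 700, 1024)
    direction = fisheye_to_vector(i, j)
    # The inverse mapping recovers (i, j) up to floating-point rounding.
    print((i, j), direction, vector_to_fisheye(*direction))
```

Because `atan2` only needs the ratio of its arguments, `vector_to_fisheye` does not require a unit vector. A direction straight behind the camera, `vector_to_fisheye(0, -1, 0)`, gives r = 2, a point outside the circle, which illustrates that the mapping is defined beyond it.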

Fulldome video: Standards

- 30 fps is the norm (exactly 30, not the 29.97 of NTSC). Square pixels.

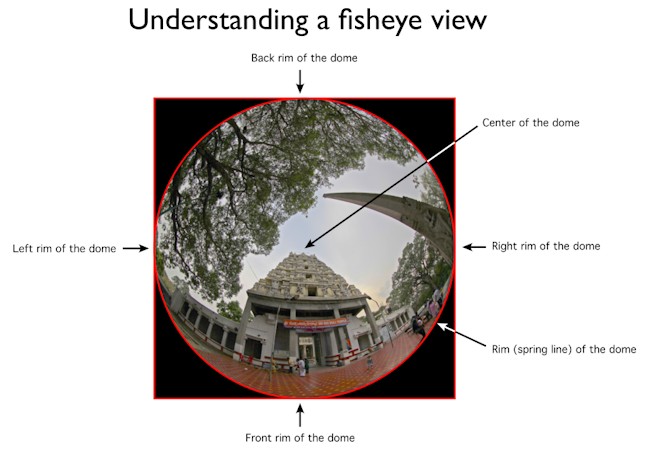

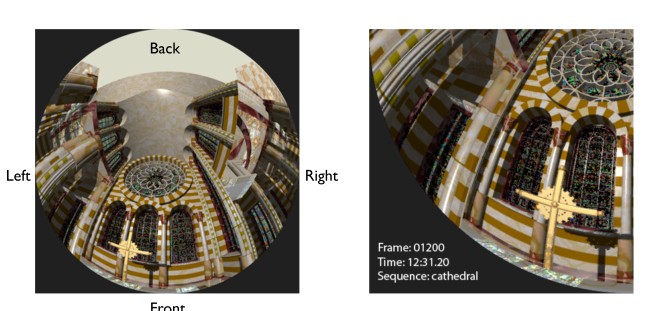

- Orientation of the fisheye frame, see image bottom-left.

- Unfortunately there are no good gamma or colour space specifications (gamma, white point, colour temperature).

- Audio requirements are quite variable between installations, from simple stereo in small domes to 7.1 systems.

- It is not uncommon to place information within the unused portions of the circular fisheye frame, e.g. frame number, sequence

Resolution: single projector systems

- Resolution for most single projector systems is between 1024 (1K) and 2048 (2K) pixels.

- Square pixels, reflecting the CG (rather than filmed) history of fulldome.

- Progressive frames; interlacing has never been used.

- Small planetariums with a low-end fisheye system may not be able to represent more than a 1K square fisheye. Some single projector systems can represent 2K.

- Spherical mirror projection with a good HD projector can represent up to 1600 pixels.

- It is difficult to compare resolution between some technologies; a system’s quoted resolution is generally not the same as the actual available/perceived resolution.

- Content is normally supplied as movies: QuickTime or some MPEG variant.

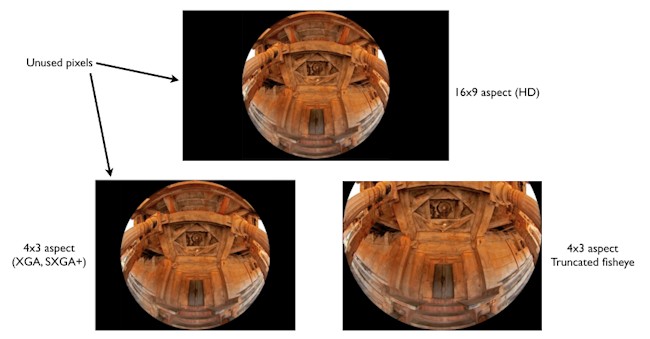

Dome projection: truncated fisheye

For fisheye lens projection, the circular fisheye image needs to be inscribed within the projector frame. This can be a very inefficient use of pixels, since many are not used. This is especially true for current 16:9 HD projectors, where more than half of the pixels fall outside the circle.
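To put numbers on this, the sketch below estimates the fraction of a projector frame covered by a centred fisheye circle by integrating row by row. The function name is illustrative, and modelling a truncated fisheye as a circle wider than the frame height, clipped symmetrically at top and bottom, is a simplifying assumption; real truncated fisheye setups may offset the circle.

```python
import math


def fisheye_pixel_fraction(width: int, height: int, diameter: float) -> float:
    """Approximate fraction of a width x height frame covered by a fisheye circle.

    The circle is centred in the frame. If its diameter exceeds the frame
    height it is clipped at the top and bottom (a truncated fisheye). Each
    pixel row contributes the length of its centre line inside the circle.
    """
    radius = diameter / 2.0
    cx, cy = width / 2.0, height / 2.0
    covered = 0.0
    for row in range(height):
        dy = row + 0.5 - cy
        if abs(dy) >= radius:
            continue  # this row lies entirely outside the circle
        half = math.sqrt(radius * radius - dy * dy)
        left = max(0.0, cx - half)
        right = min(float(width), cx + half)
        covered += max(0.0, right - left)
    return covered / (width * height)


if __name__ == "__main__":
    print("inscribed:", fisheye_pixel_fraction(1920, 1080, 1080))
    print("full width:", fisheye_pixel_fraction(1920, 1080, 1920))
```

For a 1920 x 1080 frame the inscribed circle covers roughly 44% of the pixels, while a circle as wide as the frame covers roughly 94%, at the cost of losing the parts of the fisheye image that fall outside the frame.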

Resolution: multiple projector systems

- Frame resolution for multiple projector systems has been increasing, through 2400, 3200, 3600 and 4096 (4K) pixels.

- Most productions are now mastered at 4K x 4K pixels, about 16 MPixels.

- Planetariums that have invested in high-end systems expect to receive material at that resolution.

- Some planetariums today can present 8K square images.

- It is difficult to compare resolution between some technologies; a system’s quoted resolution is generally not the same as the actual available/perceived resolution. For example, edge-blending is rarely perfect, and the edge-blended seam often looks “soft”.

- Content is normally supplied as individual uncompressed or maximum-quality frames (TGA, PNG, JPEG at 100%) along with a soundtrack of matching length.

- It is important to realise that in all cases content is supplied as fisheye images; anything that needs to be done to make them playable is the responsibility of the installation. Generally only the installation will have the necessary software and calibration information.

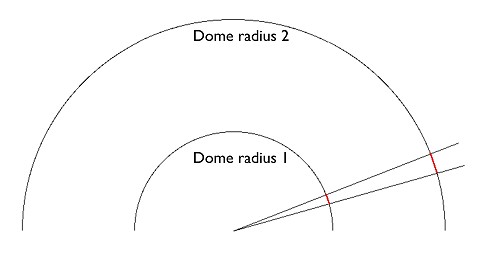

Resolution

- It is interesting to note that, for a given image resolution, the size of the dome does not change the perceived resolution: the angle a pixel subtends at the eye of a viewer near the centre is the same irrespective of the dome radius.

- It is difficult to compare resolution between some technologies. For example, current digital projectors (if in focus) can produce individually resolved pixels on the dome; this is not necessarily the case for analog CRT systems.

- How does one compare resolution when some projectors exhibit a screen-door effect?

- Some knowledge of the dome resolution of the target system is relevant to content production as it determines the finest detail that can be represented.

- More important than resolution is how it is used! Good content can hide low-resolution projection.

- One cannot necessarily create high-definition 4K fisheye images and expect to downsample them for a lower resolution installation. This is especially true for high-definition content converted for single projector systems: fine detail can be lost in the antialiasing that occurs during downsampling.

- The same applies to detail within 3D models, fine detail that can be resolved in a 4K render may not be resolved in a 1K render due to aliasing effects.

- Resolution on the dome does not necessarily have a 1:1 correspondence with the resolution of the source fisheye images; for example, most systems employ lossy compression codecs.

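- Estimates vary, but the human visual system can resolve down to around 3 arc minutes. At that resolution a 180 degree fisheye needs 180 × 60 / 3 = 3600 pixels across, about 13 MPixels for the square frame. (Dividing the hemisphere, with surface area 2 pi r^2, i.e. 2 pi steradians, into 3 arc minute elements gives a lower figure of about 8 million, because the square frame includes unused corners and the fisheye samples more densely towards the rim.)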

- Opinions vary regarding the importance of ultra high resolution compared to the storytelling component/skill. At what point does resolution stop being the determining factor in the experience?

- There is current discussion as to whether 60 Hz frame rates would be of greater benefit than higher resolution.
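The resolution arithmetic above can be sketched as follows. Seen from the dome centre, an equidistant fisheye spreads 180 degrees evenly across its diameter, so the angle per pixel depends only on the image size and never on the dome radius. The function names are illustrative, and the 3 arc minute figure is the acuity estimate quoted above.

```python
import math


def arcmin_per_pixel(fisheye_pixels: int, aperture_deg: float = 180.0) -> float:
    """Angle along a dome meridian covered by one pixel of an equidistant fisheye.

    The dome radius does not appear: the result is the same for any dome size
    when viewed from the centre.
    """
    return aperture_deg * 60.0 / fisheye_pixels


def fisheye_pixels_for(arcmin: float, aperture_deg: float = 180.0) -> int:
    """Fisheye diameter in pixels needed to reach a given angular resolution."""
    return math.ceil(aperture_deg * 60.0 / arcmin)


def hemisphere_elements(arcmin: float) -> float:
    """Number of arcmin x arcmin elements in a hemisphere (2*pi steradians)."""
    element = math.radians(arcmin / 60.0)
    return 2.0 * math.pi / element ** 2


if __name__ == "__main__":
    for n in (1024, 2048, 4096, 8192):
        print(f"{n}: {arcmin_per_pixel(n):.2f} arcmin per pixel")
    n = fisheye_pixels_for(3.0)
    print(n, "pixels across,", n * n / 1e6, "MPixels square,",
          hemisphere_elements(3.0) / 1e6, "million hemisphere elements")
```

A 1K fisheye gives about 10.5 arc minutes per pixel and a 4K fisheye about 2.6. Matching 3 arc minutes needs a 3600 pixel fisheye (about 13 MPixels as a square frame), while the hemisphere itself holds about 8.3 million 3 arc minute elements.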

Computer graphics

- Most animation/rendering packages support a circular fisheye lens or there is an external plugin.

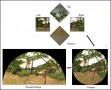

- Where this isn’t the case the most common approach is to render cubic maps and post process those to form a fisheye.

- One can choose to render between 4 and 6 faces of the cube and post-process them to generate a fisheye.

- An implication for animators is that they need to model more of the world than they normally would, because of the wider field of view.

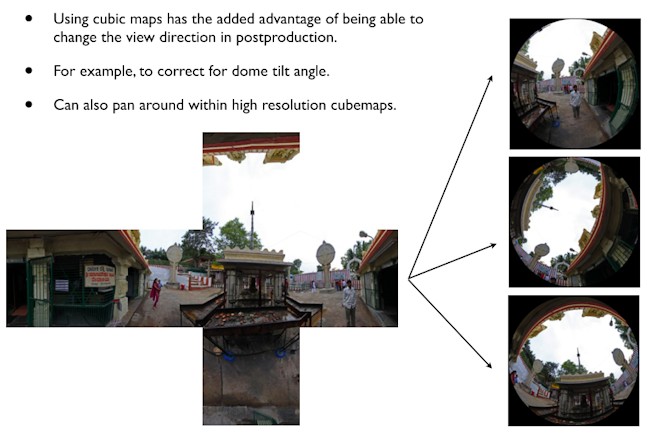

- Using cubic maps has the added advantage of being able to change the view direction in post-production.

- For example, to correct for dome tilt angle.

- Can also pan around within high resolution cubemaps.
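The cube map post-processing step amounts to looking up each fisheye pixel in the cube faces. The sketch below converts a normalised fisheye position to a cube face and face coordinates, with an optional tilt applied to the view direction, as one might when correcting for dome tilt. The face names, the (u, v) orientation on each face and the sign of the tilt are hypothetical conventions chosen for illustration; real packages differ, and sampling the rendered face images at (u, v) is left out.

```python
import math
from typing import Optional


def fisheye_to_cube(i: float, j: float,
                    tilt_deg: float = 0.0) -> Optional[tuple[str, float, float]]:
    """Normalised fisheye (i, j) -> (face, u, v) with u, v in [-1, 1].

    Hypothetical conventions: the fisheye looks along +y; faces are named by
    their dominant axis; a positive tilt rotates the view direction about the
    x axis from +y towards +z.
    """
    r = math.hypot(i, j)
    if r > 1.0:
        return None  # outside the fisheye circle
    # Fisheye position -> direction (180 degree equidistant fisheye).
    theta = math.atan2(j, i)
    phi = r * math.pi / 2.0
    x = math.sin(phi) * math.cos(theta)
    y = math.cos(phi)
    z = math.sin(phi) * math.sin(theta)

    # Tilt the view direction by rotating about the x axis.
    t = math.radians(tilt_deg)
    y, z = y * math.cos(t) - z * math.sin(t), y * math.sin(t) + z * math.cos(t)

    # The largest component selects the face; dividing by it projects the
    # direction onto that face of the unit cube.
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return ("+x" if x > 0 else "-x", z / ax, y / ax)
    if ay >= az:
        return ("+y" if y > 0 else "-y", x / ay, z / ay)
    return ("+z" if z > 0 else "-z", x / az, y / az)


if __name__ == "__main__":
    print(fisheye_to_cube(0.0, 0.0))                 # centre of the dome
    print(fisheye_to_cube(0.9, 0.1))                 # near the rim
    print(fisheye_to_cube(0.0, 0.0, tilt_deg=30.0))  # tilted view direction
```

With no tilt the -y face is never selected, so a 180 degree fisheye can be assembled without rendering every face of the cube; changing `tilt_deg` re-aims the fisheye in post-production without re-rendering.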